Wed Oct 22 - Written by: Brendan McNulty

Week 43: Building an AI purchase intent predictor

(that’s helpful but won’t replace real customers)

The Experiment

I came across an academic paper in which researchers got LLMs to predict purchase intent with 90% accuracy. Their trick? Instead of asking the AI for a direct 1-5 rating (which produces garbage), they had it write natural responses about how likely it was to buy, then mapped those responses to scores using semantic similarity rating (SSR).
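Here is a minimal Python sketch of the mapping step, assuming you already have a cosine similarity between the model's free-text response and five anchor statements (one per scale point). The function names and the temperature value are hypothetical, and a temperature-scaled softmax stands in for the normalization; the paper's exact formula may differ. The result is a probability distribution over 1-5 plus its expected value, rather than a single forced rating.

```python
import math

def ssr_distribution(similarities, temperature=0.1):
    """Turn similarities to the anchors for scale points 1..5 into probabilities.

    Assumption: a temperature-scaled softmax is used here for simplicity;
    the paper's exact normalization may differ.
    """
    if len(similarities) != 5:
        raise ValueError("expected one similarity per scale point (1-5)")
    scaled = [s / temperature for s in similarities]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]

def expected_score(distribution):
    """Probability-weighted mean rating on the 1-5 scale."""
    return sum(p * point for p, point in zip(distribution, range(1, 6)))

# Hypothetical cosine similarities between one response and anchors 1..5
sims = [0.12, 0.20, 0.41, 0.63, 0.58]
dist = ssr_distribution(sims)
print([round(p, 3) for p in dist])
print(round(expected_score(dist), 2))
```

Keeping the full distribution, not just the top point, is what lets a response like "probably, if setup is quick" land between 3 and 4 instead of being forced into one bucket.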

The potential was obvious: predict how customers react to landing pages before burning ad spend. So I built a purchase intent evaluator for a specific product page I was optimizing.

The Process

The whole build took about 4 hours. Here’s what I did:

Compiled the evidence base: Gathered everything about actual customers - webinar transcripts, Q&A sessions, case studies, page analytics, support tickets. Quality over quantity here - deep, specific context beats generic personas every time.

Adapted the SSR framework: The paper’s 5-point scale needed customization for my context:

- 1 (Definitely not): Feels complex, enterprise-level, or irrelevant

- 2 (Probably not): Still unclear what’s included or how setup works

- 3 (Unsure): Useful but missing clarity on price, fit, or setup

- 4 (Probably yes): Clear fit for target market, quick setup visible

- 5 (Definitely yes): Obvious value, one price, feels “made for me”
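To get those similarities, each point of a custom scale like the one above becomes an anchor statement. The sketch below uses a toy bag-of-words cosine similarity as a stand-in for a real embedding model (an assumption made only so the example runs without external services); the anchor wording is condensed from the list above and the helper names are hypothetical.

```python
import math
import re
from collections import Counter

# Anchor statements condensed from the custom 5-point scale
ANCHORS = {
    1: "feels complex, enterprise-level, or irrelevant",
    2: "still unclear what is included or how setup works",
    3: "useful but missing clarity on price, fit, or setup",
    4: "clear fit for my market, quick setup visible",
    5: "obvious value, one price, feels made for me",
}

def bag_of_words(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def anchor_similarities(response):
    """Similarity of a free-text response to each scale point, keyed 1..5."""
    vec = bag_of_words(response)
    return {point: cosine(vec, bag_of_words(text)) for point, text in ANCHORS.items()}

sims = anchor_similarities("Looks useful, but I'm missing clarity on the price and setup.")
print(max(sims, key=sims.get))
```

In practice you would swap `bag_of_words` for a proper sentence-embedding model, since word overlap misses paraphrases entirely.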

Created weighted dimensions: Based on the evidence, I scored 7 factors: clarity, relevance, communication quality, trust signals, ease/setup clarity, motivation cues, and visual empathy.
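The post doesn't publish its weights, so the ones below are purely hypothetical. This sketch shows one way to roll the seven dimension scores into a single weighted score on the same 1-5 scale, failing loudly if a dimension is missing rather than silently scoring it as zero.

```python
# Hypothetical weights: the actual weighting used in the evaluator is not published.
WEIGHTS = {
    "clarity": 0.20,
    "relevance": 0.15,
    "communication_quality": 0.15,
    "trust_signals": 0.15,
    "ease_setup_clarity": 0.15,
    "motivation_cues": 0.10,
    "visual_empathy": 0.10,
}

def weighted_score(scores, weights=WEIGHTS):
    """Weighted mean of per-dimension scores, each on the 1-5 scale."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing dimension scores: {sorted(missing)}")
    total_weight = sum(weights.values())  # normalizes even if weights don't sum to 1
    return sum(scores[dim] * w for dim, w in weights.items()) / total_weight

# Illustrative scores for one page variant
variant = {
    "clarity": 3, "relevance": 4, "communication_quality": 4, "trust_signals": 4.5,
    "ease_setup_clarity": 2.5, "motivation_cues": 3, "visual_empathy": 4,
}
print(round(weighted_score(variant), 2))
```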

Built with Claude + ChatGPT: Used Claude for brainstorming the framework and ChatGPT for the actual build. Fed it all the context docs with strict boundaries - no inventing data, no generic best practices, work only within the evidence.
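One way the "strict boundaries" might be encoded is to bundle the rules and the evidence into every request. The rule wording, the `build_evaluator_prompt` helper, and the section markers below are all hypothetical; this is a sketch of the idea, not the prompt used in the build.

```python
# Hypothetical guardrail wording mirroring the boundaries described above
RULES = [
    "Use only the evidence in the context documents below.",
    "Do not invent data, quotes, or statistics.",
    "Do not fall back on generic conversion best practices.",
    "If the evidence does not cover a question, say so.",
]

def build_evaluator_prompt(context_docs, page_copy):
    """Assemble a grounded evaluation prompt from named context docs and page copy."""
    rules = "\n".join(f"- {rule}" for rule in RULES)
    context = "\n\n".join(
        f"--- CONTEXT: {name} ---\n{text.strip()}" for name, text in context_docs.items()
    )
    return (
        "You are evaluating a product page for purchase intent.\n\n"
        f"Rules:\n{rules}\n\n"
        f"{context}\n\n"
        f"--- PAGE COPY ---\n{page_copy.strip()}"
    )

docs = {"webinar_qa": "Attendees kept asking what is included.", "support_tickets": "Setup took ages."}
print(build_evaluator_prompt(docs, "Headline: Sell more, stress less."))
```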

The Outcome

The evaluator produced surprisingly nuanced diagnostics. Testing different hero variants revealed patterns humans might miss:

One variant scored 3.7/5 with clear friction points: the headline conveyed confidence but didn’t clarify that the system. Nothing above the fold mentioned how simple setup was. The tool flagged that adding a single line like “Set up in 5 minutes” could raise the score significantly.

The structured output format proved genuinely useful:

- Section-by-section scoring (clarity: 3, trust: 4.5, etc.)

- Specific friction points (“Product scope unclear - users can’t instantly tell what’s included”)

- Missing motivations (“Save time and avoid admin” wasn’t addressed)

- Single high-leverage fix with evidence-based rationale
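Asking the model to reply in JSON makes that structure checkable. The field names in this sketch are assumptions, not the tool's actual schema, and the sample values are illustrative, borrowed from the examples above.

```python
import json
from dataclasses import dataclass

@dataclass
class EvaluationReport:
    """Hypothetical schema for one evaluator run."""
    section_scores: dict[str, float]  # e.g. {"clarity": 3, "trust": 4.5}
    friction_points: list[str]
    missing_motivations: list[str]
    top_fix: str                      # the single high-leverage fix
    rationale: str                    # evidence backing the fix

def parse_report(raw: str) -> EvaluationReport:
    """Parse the model's JSON reply and reject scores outside the 1-5 scale."""
    data = json.loads(raw)
    report = EvaluationReport(
        section_scores={k: float(v) for k, v in data["section_scores"].items()},
        friction_points=list(data["friction_points"]),
        missing_motivations=list(data["missing_motivations"]),
        top_fix=str(data["top_fix"]),
        rationale=str(data["rationale"]),
    )
    for section, score in report.section_scores.items():
        if not 1 <= score <= 5:
            raise ValueError(f"{section} score {score} is outside the 1-5 scale")
    return report

# Illustrative reply shaped like the examples in the post
raw = json.dumps({
    "section_scores": {"clarity": 3, "trust": 4.5},
    "friction_points": ["Product scope unclear - users can't instantly tell what's included"],
    "missing_motivations": ["Save time and avoid admin"],
    "top_fix": "Add 'Set up in 5 minutes' above the fold",
    "rationale": "Placeholder: cite the supporting customer evidence here",
})
report = parse_report(raw)
print(report.section_scores, report.top_fix)
```

Validating on parse means a malformed or out-of-range reply fails immediately instead of quietly skewing comparisons between variants.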

It consistently caught when pricing was buried, setup process unclear, or messaging too tech-centric for everyday merchants. The recommendations aligned well with known conversion drivers from the research data.

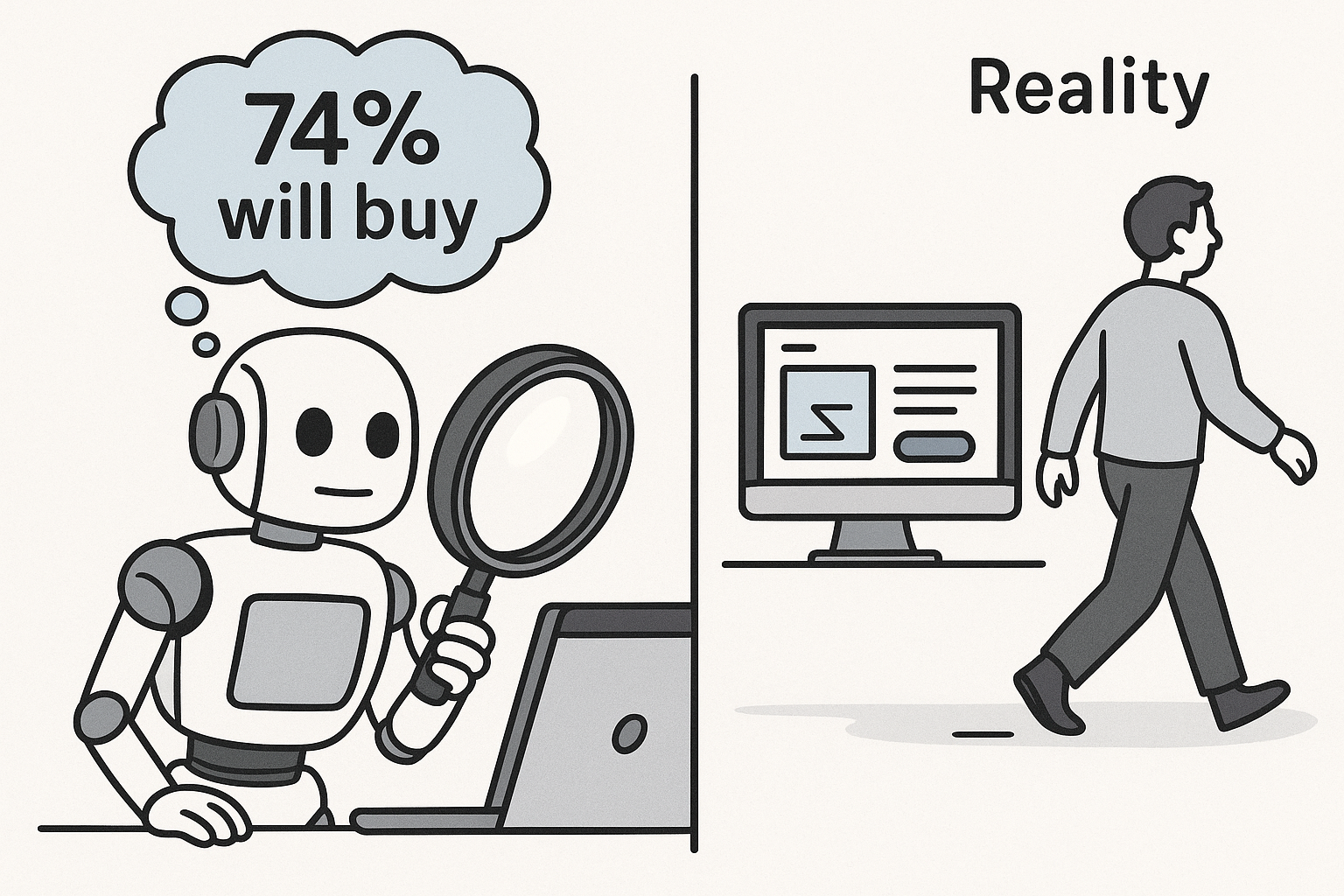

But here’s the reality: This is a first-pass diagnostic tool. It analyzes pages using patterns from customer research and the SSR framework, but it can’t predict actual human behavior. Someone might love everything about your page but not buy because their cousin recommends a competitor. Or hate the design but convert because the price is right.

Key Takeaway

This tool is valuable as a systematic first-pass filter. It catches obvious friction, ensures key messaging hits, and can review hundreds of variations in minutes. It’s particularly good at flagging clarity issues and missing trust signals.

But here’s the thing: humans are terrible predictors of their own behavior, and AI simulating humans inherits this flaw. The tool tells you if your page clearly communicates value. It can’t tell you if someone will actually buy. Real conversion involves timing, emotional state, competitive context, and that “feels right” moment no model captures.

Pro Tips for Building Your Own

- Context depth matters: Feed it real customer conversations, not personas: support tickets and reviews, as well as demographic data.

- Stay specific: Don’t build a universal evaluator. Build for your exact product, audience, and market context.

- Use it to evaluate design: Let it suggest what to tweak, not what to ship. It’s a thinking partner, not a decision maker.