Wed Nov 19 - Written by: Brendan McNulty

Week 47: Building an AI experiment generator

(that I should have built 51 weeks ago)

The Experiment

I had free credits for Claude Code ($250 worth) and a slightly embarrassing realization: I’ve been manually brainstorming newsletter ideas for almost an entire year. What if I could build a tool that generates, validates, and plans AI experiments for me?

Here I am, near the end of a 52-week project, using my brain like a savage and only now finally building the tool that could have helped me from week one.

My goal was to create an intelligent system with slash commands, specialized agents, automated hooks, and multiple output formats. Basically, I wanted something that could do in seconds what takes me hours of staring at a blank page wondering “what should I try next?”

The Process

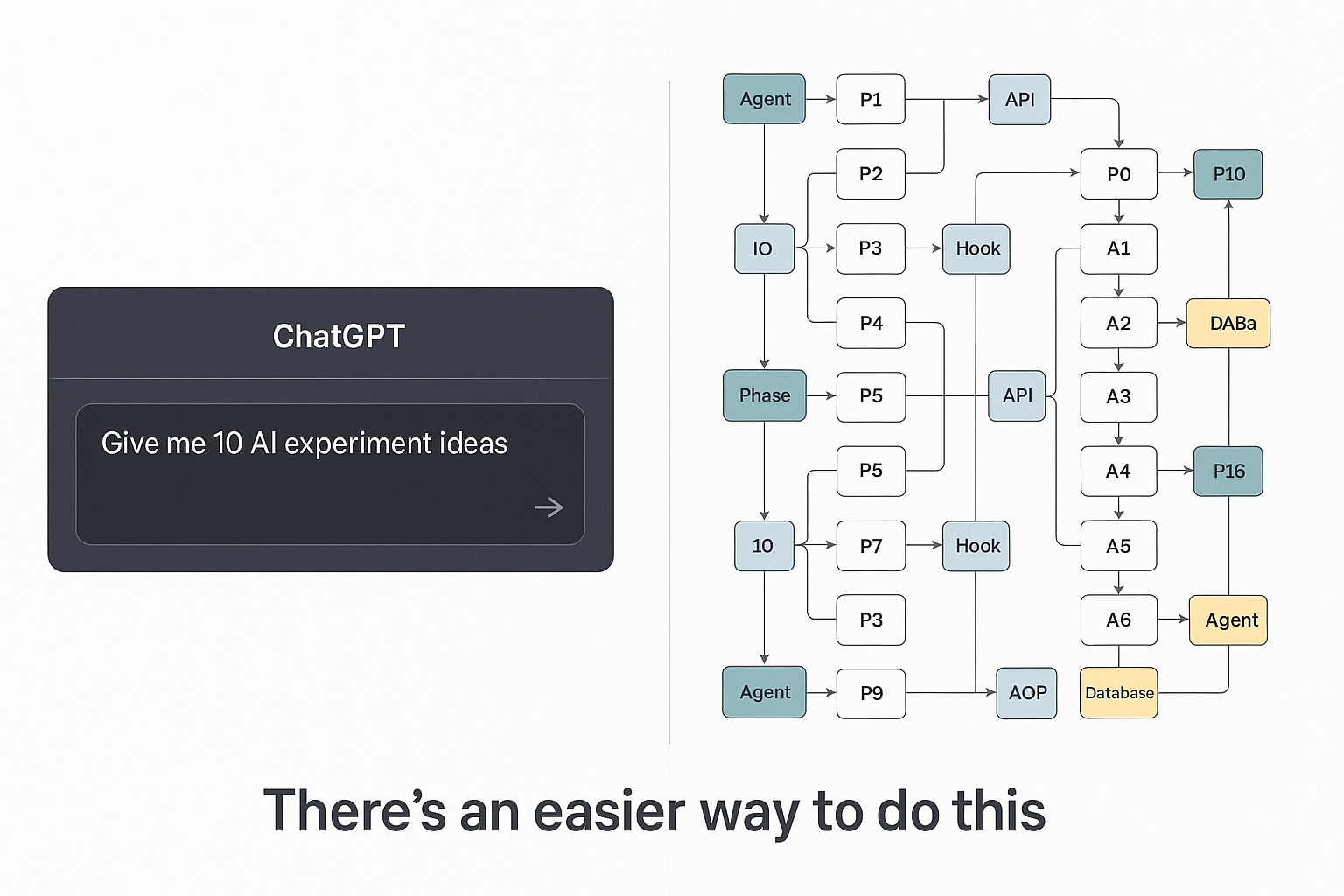

This turned into a 30-step marathon across 10 phases. Here’s what that looked like:

Phases 1-2: Foundation & Commands (Steps 1-7). Set up the project structure, installed dependencies, and built slash commands like /brainstorm, /validate, /research, and /outline. Each command was meant to do one specific job: generate ideas, check feasibility, or find similar work.

Phases 3-4: Sub-Agents & Automation (Steps 8-13). Created specialized AI agents (idea generator, validator, planner) designed to work together, and added automated hooks for tasks like auto-research and auto-tagging. This is where it started to feel complex.
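To make “agents that work together” a bit more concrete, here’s a minimal sketch of the hand-off pattern, assuming each agent is just a function that takes a record and returns an enriched one. Everything here is hypothetical: `generator_agent`, `validator_agent`, `planner_agent`, the `on_idea_created` hook registry, and the sample titles are stand-ins I made up for illustration. In Claude Code each step would be a sub-agent calling the model, and this is not Claude Code’s actual hook API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Idea:
    title: str
    category: str
    tags: List[str] = field(default_factory=list)
    feasible: Optional[bool] = None
    steps: List[str] = field(default_factory=list)


# Hypothetical hook registry: every callback runs when an idea is created.
IdeaHook = Callable[[Idea], None]
ON_IDEA_CREATED: List[IdeaHook] = []


def on_idea_created(hook: IdeaHook) -> IdeaHook:
    """Decorator that registers a hook for the 'idea created' event."""
    ON_IDEA_CREATED.append(hook)
    return hook


@on_idea_created
def auto_tag(idea: Idea) -> None:
    """Toy auto-tagger: keyword matching stands in for a model call."""
    keywords = {"bias": "ethics", "learning": "education", "paper": "research"}
    title = idea.title.lower()
    idea.tags.extend(tag for word, tag in keywords.items() if word in title)


def generator_agent(category: str) -> List[Idea]:
    """Stub idea generator: a real agent would prompt the model."""
    titles = ["Bias Detection in AI Training Data", "Personalized Learning Path Optimizer"]
    ideas = [Idea(title=t, category=category) for t in titles]
    for idea in ideas:
        for hook in ON_IDEA_CREATED:
            hook(idea)
    return ideas


def validator_agent(idea: Idea) -> Idea:
    """Stub validator: a real agent would judge feasibility with the model."""
    idea.feasible = bool(idea.title.strip())
    return idea


def planner_agent(idea: Idea) -> Idea:
    """Stub planner: only ideas that passed validation get a plan."""
    if idea.feasible:
        idea.steps = [
            f"Define a success metric for '{idea.title}'",
            "Build a small prototype",
            "Write up results",
        ]
    return idea


def run_pipeline(category: str) -> List[Idea]:
    """Each agent hands its output to the next: generate -> validate -> plan."""
    return [planner_agent(validator_agent(idea)) for idea in generator_agent(category)]
```

Swapping the keyword matcher or the stubs for real model calls wouldn’t change the shape: each agent takes a record, adds to it, and passes it on, while hooks fire on events without the agents knowing about them.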

Phases 5-7: Skills & Advanced Features (Steps 14-20). Built a skill framework for loading expertise modules, created different output formats (newsletter, social, technical), and added export options (markdown, JSON, PDF). By this point, I was following Claude Code’s instructions without fully understanding what I was building.
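The export step is the easiest piece to picture. Here’s a minimal sketch, assuming an idea is stored as a plain dict with hypothetical `title`, `summary`, and `steps` fields. It covers markdown and JSON only, since PDF output would need a third-party library, and the function names are made up for illustration rather than taken from the actual project.

```python
import json


def to_markdown(idea: dict) -> str:
    """Render an idea as a markdown snippet with a numbered step list."""
    lines = [f"# {idea['title']}", "", idea["summary"], "", "## Steps"]
    lines += [f"{n}. {step}" for n, step in enumerate(idea["steps"], start=1)]
    return "\n".join(lines) + "\n"


def to_json(idea: dict) -> str:
    """Serialize the same idea for tools that want structured data."""
    return json.dumps(idea, indent=2, ensure_ascii=False)


# Hypothetical registry: adding a format means adding one entry here.
EXPORTERS = {"markdown": to_markdown, "json": to_json}


def export(idea: dict, fmt: str) -> str:
    """Look up the exporter for `fmt` and apply it to the idea."""
    exporter = EXPORTERS.get(fmt)
    if exporter is None:
        raise ValueError(f"unsupported format: {fmt!r}")
    return exporter(idea)
```

Keeping one data record and several small renderers means the “newsletter, social, technical” variants can all be extra entries in the same table instead of separate code paths.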

Phases 8-10: Integration & Polish (Steps 21-30). Connected external APIs (GitHub, Reddit, Google Trends), built a web interface, added analytics, created a template library, and tested everything.

The whole thing took a couple of days of evening and weekend work. I spent $44 of my $250 free credits on this project.

Then came the testing phase.

I tried to use it and immediately hit HTTP 500 errors. The CLI wouldn’t connect. The browser showed “Marinating…” indefinitely. This wasn’t a me problem—it was Claude’s servers having issues. I thought it was related to the Cloudflare outage, but the errors persisted long after that was resolved.

After a day of being stuck in this loop, I had to upload all my local files to GitHub and essentially start fresh just to see if the thing actually worked.

When I finally got it running, here’s what it can do:

Available Commands:

- /brainstorm [category] - Generate ideas for NLP, vision, reinforcement learning, or multimodal

- /random - Generate creative/unconventional experiment ideas

- /validate [idea] - Check feasibility

- /research [topic] - Find related research

- /outline [idea] - Create a detailed experiment plan

- /estimate [idea] - Estimate the time and resources needed

- /trends - Show hot AI topics

- /combine [id1] [id2] - Merge two ideas into something new
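Each of these commands boils down to a name plus arguments. As a hypothetical sketch of that routing idea (not how Claude Code itself handles custom commands, which it defines as Markdown prompt files), here’s how a `/name arg1 arg2` string could be split and sent to a handler. The `COMMANDS` table, the fixed argument counts, and the echoing handlers are all assumptions for illustration; real handlers would call an agent or a model.

```python
import shlex
from typing import Callable, Dict, Tuple

# Hypothetical routing table: command name -> (number of arguments, handler).
# The handlers just echo; real ones would call an agent or a model.
COMMANDS: Dict[str, Tuple[int, Callable[..., str]]] = {
    "brainstorm": (1, lambda category: f"brainstorming {category} ideas"),
    "validate": (1, lambda idea: f"checking feasibility of {idea!r}"),
    "trends": (0, lambda: "listing hot AI topics"),
    "combine": (2, lambda id1, id2: f"merging ideas {id1} and {id2}"),
}


def dispatch(line: str) -> str:
    """Parse '/name arg ...' (shell-style quoting allowed) and run the handler."""
    if not line.startswith("/"):
        raise ValueError("commands must start with '/'")
    parts = shlex.split(line[1:])
    if not parts:
        raise ValueError("empty command")
    name, args = parts[0], parts[1:]
    if name not in COMMANDS:
        raise ValueError(f"unknown command: /{name}")
    arity, handler = COMMANDS[name]
    if len(args) != arity:
        raise ValueError(f"/{name} expects {arity} argument(s), got {len(args)}")
    return handler(*args)


print(dispatch("/combine 3 7"))  # merging ideas 3 and 7
print(dispatch('/validate "bias detection in training data"'))
```

Using `shlex.split` means a multi-word idea can be passed as one quoted argument, which matters for commands like /validate [idea].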

I tested /brainstorm and it generated ideas like:

- AI-Powered Scientific Hypothesis Generator (analyze research papers to generate testable hypotheses)

- Personalized Learning Path Optimizer (optimize learning sequences based on progress patterns)

- Bias Detection in AI Training Data (identify and quantify dataset biases)

These are… fine. They’re decent ideas.

The Outcome

I built a fully-featured AI experiment generator with:

- Multiple specialized agents

- Automated workflows

- Database storage

- External API integrations

- Web interface

- Analytics tracking

- Multiple export formats

And after all that work and $44 in credits, I could have gotten similar (possibly better) results by asking ChatGPT: “Give me 10 AI experiment ideas for my newsletter.”

The tool works. It’s sophisticated. It has features I didn’t even know I wanted. But is it useful? Debatable.

What made this frustrating was the disconnect between effort and outcome. I spent days building this complex system, following Claude Code’s instructions step by step, not fully understanding what each piece did. Then when I tried to actually use it, the infrastructure failed before I could even validate whether the complexity was worth it.

The server errors added insult to injury: imagine building an elaborate machine and then not being able to turn it on for 24 hours.

Key Takeaway

Building with Claude Code taught me a ton about development workflows, slash commands, agent systems, and API integrations. The learning was valuable even if the end product is questionable.

But it also reinforced something important: complexity isn’t always better. Sometimes a single well-crafted prompt beats an over-engineered solution. The meta-lesson here is the same one I keep learning—start simple, prove value, then add complexity if needed.

Building this tool at the end of my 52-week journey instead of the beginning is either (a) perfect timing, because I now know what I actually need, or (b) hilariously late, because I’ve already done the hard work manually.

I’m leaning toward (b).

Pro Tips for Beginners:

- Question the complexity: Before building an elaborate system, ask if a simple prompt would work just as well

- Understand before you build: Following AI instructions without understanding what you’re building can lead to over-engineering

- Test early and often: Don’t wait until you’ve completed 30 steps to see if the thing actually works

- Consider the total cost: $44 + several days of work vs. using existing tools—do the math

Want to Try It Yourself?

If you want to experiment with Claude Code:

- Get free credits (I had $250 to test with)

- Start with simpler projects than I did

- Expect server hiccups and infrastructure issues

- Have a backup plan when things break
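One small backup plan for server hiccups is to retry transient 5xx errors with exponential backoff before giving up. It won’t rescue you from a day-long outage like the one I hit, but it smooths over brief blips. This is a minimal sketch, assuming the call you wrap raises a hypothetical `ServerError` carrying the HTTP status; real SDKs raise their own exception types, so the check would need adapting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class ServerError(Exception):
    """Hypothetical error type carrying an HTTP status code."""

    def __init__(self, status: int):
        super().__init__(f"HTTP {status}")
        self.status = status


def with_retries(
    call: Callable[[], T],
    attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry 5xx failures with exponential backoff plus jitter; re-raise 4xx at once."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts - 1):
        try:
            return call()
        except ServerError as err:
            if err.status < 500:
                raise  # client errors won't fix themselves by waiting
            # Wait 1s, 2s, 4s, ... (times base_delay) plus up to 10% random jitter.
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))
    return call()  # final attempt: let any error propagate
```

Passing `sleep` in as a parameter keeps the function easy to test without actually waiting, and the jitter keeps many clients from retrying in lockstep.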

If you just want to generate experiment ideas:

- Use ChatGPT or Claude with a single prompt

- It’s faster, cheaper, and probably just as good

What’s Next?

I have this elaborate tool sitting on GitHub that I may or may not use. It’s functional, but I’m not convinced it’s better than my original workflow: a Google Sheet plus ideas drawn from problems I run into.

The real value was learning about Claude Code’s capabilities—slash commands, sub-agents, hooks, MCPs, and the whole ecosystem. Whether I needed to build this specific tool to learn those things is another question entirely.